AI is now better than humans at prompt engineering.

The Rise of Automated Prompt Engineering: Let AI Take the Wheel

Prompt engineering, the skill of crafting the right input phrase for generative AI programs like OpenAI’s ChatGPT, might soon be automated. Instead of a person rewording a prompt by hand, a large language model can be put in charge of searching for better prompts.

In a recent paper from Google’s DeepMind unit, Chengrun Yang and colleagues introduce OPRO (Optimization by PROmpting), a program that automates the search for the most effective prompt for a large language model. Instead of a person iterating by hand, the AI program performs the trial and error itself, generating many candidate prompts until it finds the best one.

The heart of OPRO is what the authors call the “Meta-Prompt.” It shows the language model the prompts that were tried before, together with how well each one scored on the task, and asks for new prompts that should score higher. The new prompts are then scored and fed back in, and the cycle repeats. It’s like having a person at the keyboard, typing out different possibilities based on past experience. Meta-Prompt is not tied to one model: it can be applied to any large language model, whether it’s GPT-3, GPT-4, or Google’s PaLM 2.
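To make the idea concrete, here is a minimal sketch of how past prompts and their scores might be assembled into a meta-prompt. The function name `build_meta_prompt`, the wording of the instructions, the worst-to-best ordering, and the example scores are all assumptions made for illustration; they are not taken from DeepMind’s code or results.

```python
from typing import List, Tuple


def build_meta_prompt(
    task_description: str,
    scored_prompts: List[Tuple[str, float]],
    max_kept: int = 3,
) -> str:
    """Assemble a meta-prompt from past prompts and their scores (illustrative only)."""
    # Keep only the highest-scoring prompts, listed from worst to best.
    kept = sorted(scored_prompts, key=lambda pair: pair[1])[-max_kept:]
    lines = [task_description, "", "Previous prompts and their scores:"]
    for text, score in kept:
        lines.append(f"prompt: {text}")
        lines.append(f"score: {score:.1f}")
    lines.append("")
    lines.append("Write a new prompt that differs from those above and scores higher.")
    return "\n".join(lines)


# Made-up history for illustration; these scores are not real measurements.
history = [
    ("Solve the problem.", 60.0),
    ("Let's think step by step.", 71.0),
    ("Answer quickly.", 42.0),
    ("Work through the arithmetic carefully.", 68.0),
]
meta_prompt = build_meta_prompt(
    "Find a prompt that helps solve math word problems.", history
)
print(meta_prompt)
```

In a full loop, the text returned here would be sent to the language model, the prompts it writes back would be scored, and the scored prompts would be appended to `history` before the next round.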

One notable aspect of OPRO is that it can optimize prompts for a variety of tasks. Instead of explicitly defining the ideal state in formal terms, Yang and his team describe it in natural language, which lets the approach adapt to changing optimization requests across different tasks. In essence, large language models are now used to optimize the prompts themselves, not just to solve the problems those prompts describe.

The initial experiments with OPRO focused on simpler tasks, such as linear regression. Surprisingly, OPRO was able to find solutions to these mathematical problems simply by generating prompts. In fact, OPRO’s prompts achieved comparable results to those of specialized solvers, even though the language models were not explicitly programmed for such tasks. This discovery opens up new possibilities for prompt optimization and emphasizes the effectiveness and versatility of large language models.
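The linear regression setup can be sketched as a propose-and-score loop: keep a short list of the best `(w, b)` pairs found so far, ask for new candidates, score them, and repeat. In the sketch below the language model is replaced by a hypothetical `stand_in_proposer` that simply perturbs the best known pairs at random, so this shows the shape of the loop, not the authors’ method or their results. All names and parameters are assumptions.

```python
import random
from typing import List, Tuple

Point = Tuple[float, float]   # (x, y) observation
Params = Tuple[float, float]  # (w, b) candidate solution
Scored = Tuple[Params, float]


def squared_error(params: Params, data: List[Point]) -> float:
    """Sum of squared residuals for the line y = w * x + b."""
    w, b = params
    return sum((y - (w * x + b)) ** 2 for x, y in data)


def stand_in_proposer(scored: List[Scored], rng: random.Random,
                      n_proposals: int = 8) -> List[Params]:
    """Hypothetical stand-in for the LLM: randomly perturbs the best pairs."""
    best = scored[:3]
    proposals = []
    for _ in range(n_proposals):
        (w, b), _loss = rng.choice(best)
        proposals.append((w + rng.uniform(-1.0, 1.0), b + rng.uniform(-1.0, 1.0)))
    return proposals


def optimize(data: List[Point], initial: List[Params], steps: int = 200,
             seed: int = 0, history_size: int = 20) -> Scored:
    """Repeat propose -> score -> keep the best, returning the best pair found."""
    rng = random.Random(seed)
    scored = sorted(((p, squared_error(p, data)) for p in initial),
                    key=lambda item: item[1])
    for _ in range(steps):
        for candidate in stand_in_proposer(scored, rng):
            scored.append((candidate, squared_error(candidate, data)))
        scored.sort(key=lambda item: item[1])
        del scored[history_size:]  # keep only the best candidates as history
    return scored[0]


# Synthetic data generated from y = 3x + 2.
data = [(float(x), 3.0 * x + 2.0) for x in range(10)]
best_params, best_loss = optimize(data, initial=[(0.0, 0.0)])
print(best_params, best_loss)
```

Because the history always retains the best pair seen so far, the returned loss can never be worse than the starting point; how close it gets to the true line depends on the proposer, which is exactly the role the language model plays in OPRO.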

Prompt optimization is widely seen as an important part of working with large language models. Earlier this year, Microsoft proposed “Automatic Prompt Optimization,” which edits existing prompts to improve their effectiveness. Yang and his team go a step further with Meta-Prompt, generating entirely new prompts informed by past prompts rather than merely editing them. According to the paper, this approach produces better prompts than those designed by humans.

To gauge the capabilities of Meta-Prompt, Yang and his team tested it on benchmark evaluations known to be sensitive to the exact wording of the prompt. One such evaluation is “GSM8K,” a series of grade school math word problems introduced by OpenAI. The automatically generated prompts performed better than those crafted by hand: according to the paper, the best OPRO prompts outperformed human-designed prompts by up to 8% on GSM8K and by up to 50% on Big-Bench Hard tasks.
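Comparing prompts on a benchmark comes down to scoring each one by the share of questions the model answers correctly when that prompt is used. The sketch below shows one way such a score could be computed. The `score_prompt` function, the question format, and especially `toy_model` are hypothetical: the toy model is hard-coded to answer correctly only when the prompt contains “step by step,” so its numbers say nothing about real model behavior.

```python
from typing import Callable, List, Tuple


def score_prompt(prompt: str, examples: List[Tuple[str, str]],
                 model: Callable[[str], str]) -> float:
    """Return the percentage of examples answered correctly with this prompt."""
    if not examples:
        raise ValueError("examples must not be empty")
    correct = 0
    for question, expected in examples:
        answer = model(f"{prompt}\n\nQ: {question}\nA:")
        if answer.strip() == expected:
            correct += 1
    return 100.0 * correct / len(examples)


def toy_model(full_prompt: str) -> str:
    """Hypothetical stand-in for an LLM; not representative of any real model."""
    question_line = next(
        line for line in full_prompt.splitlines() if line.startswith("Q: ")
    )
    a, b = question_line[3:].split(" + ")
    # The toy model only "reasons" when explicitly told to go step by step.
    if "step by step" in full_prompt:
        return str(int(a) + int(b))
    return "0"


examples = [("2 + 3", "5"), ("10 + 7", "17"), ("0 + 0", "0")]
careful_score = score_prompt("Let's think step by step.", examples, toy_model)
terse_score = score_prompt("Answer.", examples, toy_model)
print(careful_score, terse_score)
```

A score like this is what gets paired with each prompt when past attempts are fed back to the optimizer.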

Meta-Prompt’s ability to construct intricate prompts was evident in its performance on complex reasoning tasks. For example, it successfully tackled the “temporal_sequence” task, which requires working out the possible time a person could have gone to a place based on the information given. The generated prompts spelled out detailed step-by-step instructions, showing that Meta-Prompt can handle complex scenarios.

While Meta-Prompt is a promising advance in prompt engineering, there is still room for improvement. The algorithm currently struggles to extrapolate from negative examples. The researchers tried incorporating error cases into the prompts, but the results did not change much, which suggests the error cases alone were not informative enough for the language model to grasp the cause of the incorrect predictions. Further research is needed to refine the prompt optimization process.

As AI continues to evolve, automated prompt engineering could revolutionize the way we interact with large language models. No longer confined to human-designed prompts, these models possess the ability to generate optimal prompts tailored to specific tasks. The days of manually crafting the perfect input phrase may soon be a thing of the past, as AI takes the wheel and navigates the world of prompt engineering with efficiency and accuracy.

So, if you were considering a future career in prompt engineering, get ready to witness the transformation of this field as automation takes the reins. And who knows, maybe your next programming job will involve fine-tuning the Meta-Prompt algorithm to create even better prompts, paving the way for smarter and more effective AI interactions.

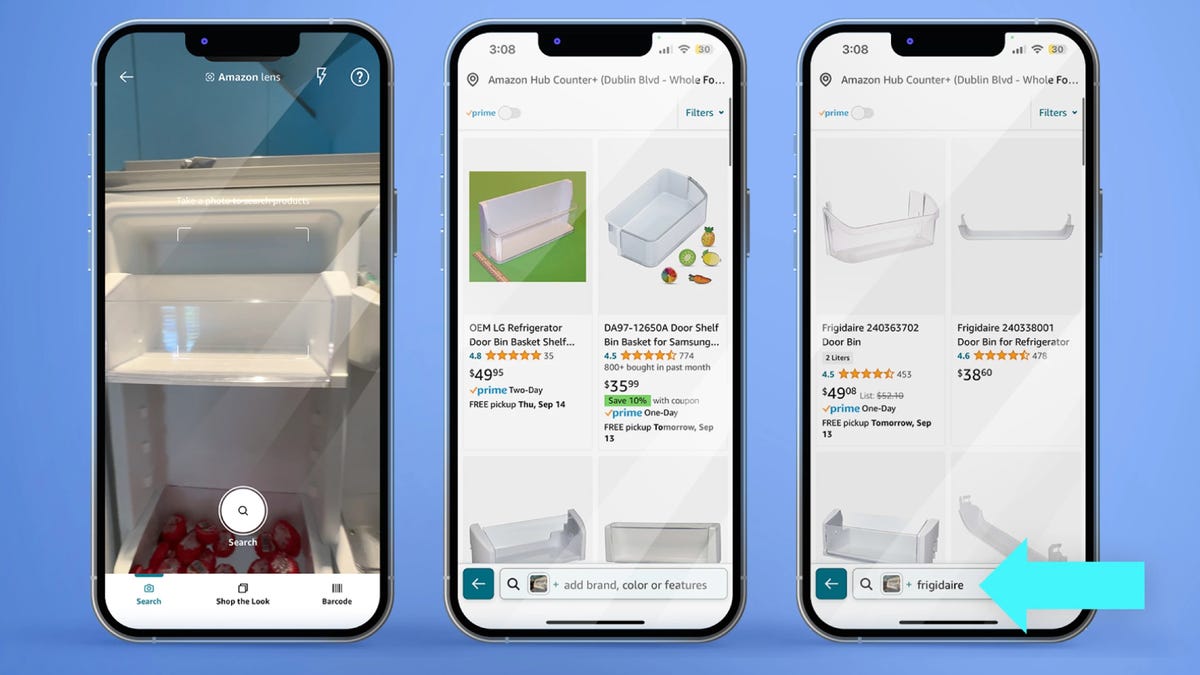

#### Caption: The structure of DeepMind’s Meta-Prompt.

#### Caption: An example of the “meta-prompt” used to prompt the language model to come up with more optimal prompts.

References:

- ‘Large Language Models as Optimizers’ – Research Paper by Chengrun Yang and Team, Google DeepMind
- ‘7 advanced ChatGPT prompt-writing tips you need to know’ – ZDNet
- ‘Extending ChatGPT: Can AI chatbot plugins really change the game?’ – ZDNet
- ‘How to access thousands of free audiobooks, thanks to Microsoft AI and Project Gutenberg’ – ZDNet
- ‘The best AI image generators: DALL-E 2 and alternatives’ – ZDNet
- ‘How does ChatGPT actually work?’ – ZDNet
- ‘6 AI tools to supercharge your work and everyday life’ – ZDNet